Efficiently Handle Large Tasks with Ease: A Guide to Async Generator Functions for Batch Processing

Introduction to Async Generator Functions for Efficient Batch Processing

Batch processing is a common task when working with a large dataset, especially when each unit of work is time-consuming. Async generator functions in JavaScript offer a convenient way to process large datasets asynchronously in batches, which can improve throughput and shorten the time needed to finish the overall job.

Memory consumption, on the other hand, may increase because multiple operations run concurrently; how much depends on the work being done.

Async generator functions are a combination of two concepts: async functions and generator functions. So let's check the generator function first with a simple example before moving to the async generator function.

Generator function

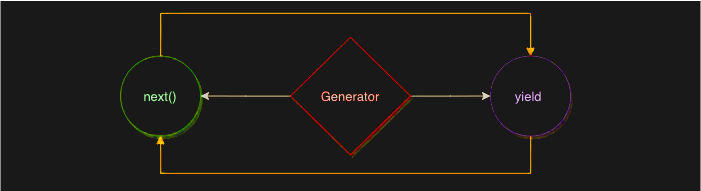

The function* syntax is used to define generator functions rather than the usual function syntax. The yield keyword is used within the generator function to pause the function and return a value to the caller. When you're ready to resume function execution, call the next() method on the iterator object to resume execution where it left off.

The generator object has a next method, which returns another object with the properties value and done.

value contains the yielded value, whereas done is a boolean that signals the end of the iteration when it is true.

In this case, the createSequence function is an endless sequence generator, which means that no matter how many times you call the next method, it will always return a value and the done property will always be false.

function* createSequence() {

let i = 0;

while (true) {

yield i++;

}

}

const generator = createSequence();

console.log(generator.next()); // { value: 0, done: false }

console.log(generator.next()); // { value: 1, done: false }

console.log(generator.next()); // { value: 2, done: false }

Let's take the previous example and apply a maximum limit to the sequence. In the next example, we set the limit to 2, so when you call the next method a third time, value is undefined and done is true.

function* createSequenceUpTo(n) {

for (let i = 1; i <= n; i++) {

yield i;

}

}

const generator = createSequenceUpTo(2);

console.log(generator.next()); // { value: 1, done: false }

console.log(generator.next()); // { value: 2, done: false }

console.log(generator.next()); // { value: undefined, done: true }

Understanding the usefulness of the "done" property

If we pass the generator from the previous example to a for...of loop, the loop stops automatically after two iterations, because the generator yields only two values. This is how the "done" property returned by the next method is useful in loops: the loop keeps calling next until done is true.

function* createSequenceUpTo(n) {

for (let i = 1; i <= n; i++) {

yield i;

}

}

for(const number of createSequenceUpTo(2)) {

console.log(number)

}
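To make the role of done more concrete, here is a minimal sketch of roughly what a for...of loop does with a generator: it asks for an iterator, calls next repeatedly, and stops as soon as done is true. The collect helper is a hypothetical name introduced only for this illustration, and the sketch ignores details such as early exit handling.

```javascript
// Same generator as in the example above.
function* createSequenceUpTo(n) {
  for (let i = 1; i <= n; i++) {
    yield i;
  }
}

// Hypothetical helper (not part of the article's code): gathers values
// by calling next() manually until done becomes true.
function collect(iterable) {
  const iterator = iterable[Symbol.iterator]();
  const values = [];
  let result = iterator.next();
  while (!result.done) {
    values.push(result.value);
    result = iterator.next();
  }
  return values;
}

console.log(collect(createSequenceUpTo(2))); // [ 1, 2 ]
```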

Async generator function

So far, we've figured out how the generator function works. To spice things up, let's add the async keyword to the generator function.

An async generator function returns an async generator object. Async generators support asynchronous iteration, which means they can produce data over time without blocking the rest of the program.

Since we are dealing with an async generator, we have to iterate over it with a for await...of loop. Each call to the next method returns a promise, and the loop waits for that promise to settle before it runs the loop body, which matters when the current iteration depends on the results of earlier ones.

In simpler terms, the for await...of loop iterates over the async generator, and it does not request the next value until the promise for the current one is fulfilled.

async function simulateDelay(value, delay) {

return new Promise((resolve) => setTimeout(() => resolve(value), delay));

}

async function* generateNumbers(n) {

for (let i = 0; i < n; i++) {

yield simulateDelay(i, 1000);

}

}

(async function () {

for await (const number of generateNumbers(5)) {

console.log(number);

}

})();
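As a rough illustration of what the for await...of loop is doing, the sketch below drives the same async generator by hand. It shows that next returns a promise and that each value is awaited before the next one is requested. The collectAsync helper is a hypothetical name used only here, and the delay is shortened to 10 ms so the example finishes quickly.

```javascript
function simulateDelay(value, delay) {
  return new Promise((resolve) => setTimeout(() => resolve(value), delay));
}

async function* generateNumbers(n) {
  for (let i = 0; i < n; i++) {
    // A yielded promise is awaited by the async generator,
    // so consumers receive the resolved value.
    yield simulateDelay(i, 10);
  }
}

// Hypothetical helper: manual equivalent of a for await...of loop.
async function collectAsync(asyncIterable) {
  const iterator = asyncIterable[Symbol.asyncIterator]();
  const values = [];
  let result = await iterator.next();
  while (!result.done) {
    values.push(result.value);
    result = await iterator.next();
  }
  return values;
}

// next() on an async generator returns a promise, not a plain object.
console.log(generateNumbers(1).next() instanceof Promise); // true

collectAsync(generateNumbers(3)).then((values) => console.log(values)); // [ 0, 1, 2 ]
```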

Batch Processing with Async Generator Functions

Let's look at how we can use async generator functions for batch processing now.

A big dataset is divided into manageable chunks during batch processing, and then each chunk is handled separately.

In order to create batch processing with async generator functions, we can change our generator function to produce a batch of values rather than just one.

Here's an example:

async function* generateBatches(data, batchSize) {

for (let i = 0; i < data.length; i += batchSize) {

yield data.slice(i, i + batchSize);

}

}

(async function() {

for await (const batch of generateBatches(data, 100)) {

await processBatch(batch);

}

})();
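The snippet above leaves data and processBatch undefined. A self-contained version might look like the following sketch, where processBatch is a hypothetical stand-in that simply sums each batch, and run is an illustrative wrapper that collects the per-batch results.

```javascript
async function* generateBatches(data, batchSize) {
  for (let i = 0; i < data.length; i += batchSize) {
    yield data.slice(i, i + batchSize);
  }
}

// Hypothetical stand-in for real work (an API call, a database write, ...).
async function processBatch(batch) {
  return batch.reduce((sum, n) => sum + n, 0);
}

// Illustrative wrapper: processes batches one after another.
async function run(data, batchSize) {
  const sums = [];
  for await (const batch of generateBatches(data, batchSize)) {
    sums.push(await processBatch(batch));
  }
  return sums;
}

const data = Array.from({ length: 10 }, (_, i) => i + 1); // 1..10

// Batches are [1,2,3,4], [5,6,7,8] and [9,10].
run(data, 4).then((sums) => console.log(sums)); // [ 10, 26, 19 ]
```

Note that the last batch is shorter than batchSize whenever the data length is not an exact multiple of it.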

A complete example of an Async Batch Process

postIds - A list of post IDs (0 to 9 in this example) that we want to send to the server for processing

longRunningProcess - A stand-in for a long-running operation, such as posting data to the server

tasks - A list of functions that each call longRunningProcess for one post ID

batchTasks - An async generator function that runs the list of tasks (longRunningProcess calls) in batches

sleep - A helper function that mocks a long-running process

async function* batchTasks(tasks, limit) {

for (let i = 0; i < tasks.length; i = i + limit) {

const batch = tasks.slice(i, i + limit);

const result = await Promise.all(batch.map((task) => task()));

yield result;

}

}

const postIds = Array.from({ length: 10 }).map((e, idx) => idx);

const longRunningProcess = async (postId) => {

// Dummy sleep between 0 and 5 seconds to mimic a long process

await sleep();

return postId;

};

const tasks = postIds.map((postId) => () => longRunningProcess(postId));

// console.log(tasks);

const CONCURRENT_PROCESS = 3;

(async function () {

for await (const batch of batchTasks(tasks, CONCURRENT_PROCESS)) {

//Do something with the processed batch

console.log("Processed batch", batch);

}

})();

/*

* Timeout function

*/

async function sleep() {

// Random timeout between 0 and 5 seconds

return new Promise((resolve) => {

const ms = Math.floor(Math.random() * 5000);

console.log(`process time ${~~(ms / 1000)} Sec`);

setTimeout(resolve, ms);

});

}

Boost batch processing through pooling

Batch processing groups "n" tasks to be processed at once, but it waits for every task in the current batch to complete before starting the next batch.

Imagine you have ten batches, each with ten tasks. When the first batch runs, 10 tasks are processed concurrently. Let's assume three of them complete early, while the remaining seven are still in progress.

Three slots are now free, so we could start three new tasks right away. We certainly don't want to wait for the remaining seven to finish before filling those slots.

And this is where the pool concept is useful: it keeps 10 jobs in flight at all times, regardless of the status of any single batch.
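To give a feel for the idea, here is a minimal sketch of a pool, not a production implementation. It assumes tasks are functions that return promises, as in the complete example above. The names runPool, worker, and makeTask are hypothetical, and the active and maxActive counters exist only to show that the concurrency limit is respected.

```javascript
// Hypothetical pool: keeps up to `limit` tasks in flight at any time.
async function runPool(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0; // index of the next task that has not started yet

  // Each worker picks up a new task as soon as its previous one finishes.
  async function worker() {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const workerCount = Math.min(limit, tasks.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Demo tasks that record how many run at the same time.
let active = 0;
let maxActive = 0;
const makeTask = (id, ms) => async () => {
  active++;
  maxActive = Math.max(maxActive, active);
  await new Promise((resolve) => setTimeout(resolve, ms));
  active--;
  return id;
};

const tasks = [30, 10, 20, 10, 10].map((ms, id) => makeTask(id, ms));

runPool(tasks, 2).then((results) => {
  console.log(results); // [ 0, 1, 2, 3, 4 ]
  console.log(maxActive); // 2
});
```

Results are stored by task index, so they come back in the original order even though tasks finish at different times. This sketch does not handle rejected tasks: a single failure rejects the whole runPool call.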

I have a separate article on how to take a batch process to the next level.